Chapter 4: Gather Credible Evidence

Vocabulary

- Credible: Able to be trusted or believed because it is reliable and based on evidence. In public health, credibility refers to information, research, or sources that are trustworthy and authoritative.

- Transparent: Open and easily understood, without hidden agendas or secrets. In public health, transparency means that processes, decisions, and information are clear and accessible to everyone involved.

- Standardized: Made consistent or uniform according to established norms or criteria. In public health, standardized procedures or measures ensure that practices and data collection methods are consistent across different settings or populations.

- Utility: The usefulness or practical value of something. In public health, utility refers to how effective a tool, intervention, or information is in achieving its intended purpose or improving health outcomes.

- Feasibility: The likelihood or practicality of successfully implementing a plan or intervention. In public health, feasibility assesses whether a proposed strategy or program can realistically be carried out given available resources, time, and support.

- Propriety: Conforming to accepted standards of behavior or ethics. In public health, propriety involves ensuring that actions, decisions, and interventions are morally and ethically appropriate, and respect the rights and dignity of individuals and communities.

(Centers for Disease Control and Prevention, 2011)

Overview

After you solidify the focus of your evaluation and identify the questions to be answered, you need to select methods that fit those questions. Sometimes the approach is driven by a favorite method, and the evaluation is forced to fit that method, which can lead to incomplete or inaccurate answers to the evaluation questions. Ideally, the evaluation questions inform the methods. It also helps to set a timeline and to identify the roles and responsibilities of those overseeing implementation of the evaluation activities.

Fitting the Method to the Evaluation Questions

Choose a method because it fits the evaluation questions, not because it is a favorite. A mismatch between evaluation question and method can lead to incomplete or inaccurate information. The method needs to be appropriate for the question in accordance with the Evaluation Standards.

To accomplish this step in your evaluation plan, you will need to do the following:

- Keep in mind the purpose, logic model, program description, evaluation questions, and what the evaluation can and cannot deliver.

- Confirm that the method fits the questions. There are many options, including qualitative, quantitative, mixed-methods, multiple-methods, naturalistic inquiry, experimental, and quasi-experimental approaches.

- Think about what will constitute credible evidence for stakeholders or users.

- Identify sources of evidence (for example, persons, documents, observations, administrative databases, surveillance systems) and methods for obtaining reliable and valid data.

- Identify roles and responsibilities, along with timelines, to keep the project on time and on track.

- Remain flexible, adaptive, and, as always, transparent.

Choosing the Appropriate Methods

Will you use qualitative or quantitative methods? Neither is inherently right or wrong; the question is which method, or combination of methods, will answer the evaluation questions.

Situations that may point you toward qualitative methods include the following:

- You are planning and want to assess what to consider when designing a program or initiative. You want to identify elements that are likely to be effective.

- You want feedback while a program or initiative is in its early stages and plan to conduct a process evaluation. You want to understand approaches that increase the likelihood that an initiative (for example, a policy or environmental change) will be adopted.

- Something isn’t working as expected, and you need to know why. You need to understand the facilitators of and barriers to implementing a particular initiative.

- You want to truly understand how a program is implemented on the ground and need to develop a model or theory of the program or initiative.

Situations that may point you toward quantitative methods include the following:

- You want to identify current and future trends in a particular phenomenon or initiative.

- You want to consider standardized outcomes across programs. You need to monitor the outputs and outcomes of an initiative. You want to document the impact of a particular initiative.

- You want to know the costs associated with implementing a particular intervention.

- You want to understand which standardized outcomes are connected with a particular initiative and need to assess the theory of the program or initiative.

Alternatively, the best choice may be a mixed-methods approach, in which qualitative data help interpret and apply the quantitative data.

Credible Evidence

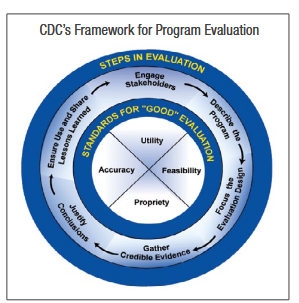

For a description of the image, access the appendix

The evidence you gather to answer your evaluation questions should be credible to the primary users of the evaluation. What counts as credible depends on the context and the stakeholders, so gather stakeholder input on which methods are most appropriate to the questions. Being transparent about the limitations of the evaluation methods will help make the results more acceptable to stakeholders, whose input is important in Step 4.

Example: Decide which of the following is a more credible source of evidence about this program.

- Evaluation Question: Does our safety campaign increase use of seatbelts in our community?

- Possible data source: statewide seatbelt use data from last year

- Possible data source: observational surveys of current seatbelt use in our community

In this example, last year's statewide seatbelt use data cannot show whether our local campaign changed behavior. Observational surveys of current seatbelt use in our own community are the more credible source of evidence.

Measurement

If the method selected includes indicators or performance measures, deciding which measures to include is critical. This discussion is tied to data credibility, and there is a wide range of possible indicators to choose from. The expertise of your evaluation stakeholder workgroup (ESW) will help guide this discussion.

Data Sources and Methods

It is important to select the methods most appropriate to answer the evaluation question. The data needed should be considered for both credibility and feasibility. Depending on the methods chosen, you may need a variety of inputs, such as case studies, interviews, naturalistic inquiry, focus groups, and surveys, and you may need to draw on multiple data sources. Data may come from existing sources or be gathered from program-specific sources. The form of the data (quantitative or qualitative) and the specifics of how the data will be collected must be defined and transparent.

Every approach has strengths and limitations, and your ESW should weigh them. For example, using existing data sources may reduce costs, make the most of existing information, and allow comparison with other programs, but such data may not be specific enough for your program or appropriate for your question and method.

Not all methods fit all evaluation questions, and often a mixed-methods approach is the best option. All data collected should have a clear link to an evaluation question; this reduces unnecessary burden on respondents and stakeholders. Quality assurance procedures must be put in place so that data are collected reliably and checked for accuracy.

Roles and Responsibilities

It is important to identify the roles and responsibilities of staff and stakeholders from the beginning of the planning process. Ideally, work to engage your network of stakeholders throughout the development of the plan.

Evaluation Plan Methods Grid

One tool that is particularly useful in your evaluation plan is an evaluation plan methods grid. Not only is this tool helpful to align evaluation questions with methods, indicators, performance measures, data sources, roles, and responsibilities, but it can facilitate a shared understanding of the overall evaluation plan with stakeholders. The tool can take many forms and should be adapted to fit your specific evaluation and context.

EXAMPLE OF DEVELOPING A PLAN TO GATHER CREDIBLE EVIDENCE

Program: SafeTeens in Lincoln County. The county health department is starting a project to form a teen safety coalition and conduct a seatbelt campaign.

- Assess data needs by identifying indicators needed for each evaluation question

- Decide which method (qualitative or quantitative) and type of data (primary or secondary) is needed

- Decide the frequency needed for collecting data on each indicator

- Justify data collection plans to stakeholders

| Evaluation Question | Indicator(s) | Method/ | Frequency | Justification |
| --- | --- | --- | --- | --- |
| How did the rate of seatbelt use change after the campaign? |  |  | Twice (once at baseline, once after the campaign) | Rate of seatbelt use is a quantifiable number. Observational surveys before and after the campaign will reveal any improvements in compliance. Teen involvement in observations will also help motivate teens to comply. |
| How many seatbelt citations were issued before and after the campaign? |  |  | Twice (once at baseline, once after the campaign) | Rate of compliance is quantitative. Data are already being kept by law enforcement. Searching records will reveal citation rates before and after the campaign, which can be compared to see whether noncompliance has decreased. |
| How many teens are attending coalition meetings? |  |  | Monthly; once, updated as needed; bimonthly | Engagement can be measured quantitatively by attendance at meetings. Records of attendance are needed only once per meeting. Qualitative surveys every 2 months can collect members’ comments to help gauge their level of engagement. |
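The first row of the grid compares the rate of seatbelt use from two observational surveys. The arithmetic can be sketched in Python as follows. The tallies are hypothetical numbers made up for illustration, not Lincoln County data, and the function name `seatbelt_use_rate` is our own.

```python
# Hypothetical observational-survey tallies (illustrative numbers only,
# not real Lincoln County data).
baseline = {"observed": 400, "belted": 260}
post_campaign = {"observed": 420, "belted": 315}


def seatbelt_use_rate(tally: dict) -> float:
    """Return the percentage of observed occupants wearing a seatbelt."""
    if tally["observed"] == 0:
        raise ValueError("no occupants observed")
    return 100 * tally["belted"] / tally["observed"]


before = seatbelt_use_rate(baseline)        # baseline survey
after = seatbelt_use_rate(post_campaign)    # survey after the campaign

# Absolute change in percentage points, and change relative to baseline.
change_points = after - before
relative_change = 100 * change_points / before

print(f"Baseline use rate: {before:.1f}%")
print(f"Post-campaign use rate: {after:.1f}%")
print(f"Change: {change_points:+.1f} percentage points "
      f"({relative_change:+.1f}% relative to baseline)")
```

With these made-up tallies, use rises from 65.0% to 75.0%, a change of +10.0 percentage points (about +15.4% relative to baseline). Reporting both figures helps stakeholders read the result correctly. A simple before-and-after comparison describes change; by itself, it does not rule out other explanations for that change.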

Budget

The evaluation budget discussion most likely began during Step 3, when the team discussed the focus of the evaluation and feasibility issues. It is now time to develop a complete evaluation project budget based on the decisions made about the evaluation questions, the methods, and the roles and responsibilities of stakeholders. A complete budget is necessary to ensure that the evaluation project is fully funded and can deliver what it promises.

Evaluation Plan Tips for Step 4

- Select the method(s) that best answer the evaluation questions. This often involves a mixed-methods approach.

- Gather evidence that the primary users of the evaluation see as credible.

- Define implementation roles and responsibilities for program staff, evaluation staff, contractors, and stakeholders.

- Develop an evaluation plan methods grid to build a shared understanding of the overall evaluation plan and the timeline for evaluation activities.

At This Point, Your Plan Should Include the Following:

- Identified the primary users of the evaluation.

- Created the evaluation stakeholder workgroup.

- Defined the purposes of the evaluation.

- Described the program, including context.

- Created a shared understanding of the program.

- Identified the stage of development of the program.

- Prioritized evaluation questions and discussed feasibility issues.

- Discussed issues related to credibility of data sources.

- Identified indicators and/or performance measures linked to chosen evaluation questions.

- Determined implementation roles and responsibilities for program staff, evaluation staff, contractors, and stakeholders.

- Developed an evaluation plan methods grid.

References

This content is provided to you freely by BYU-I Books.

Access it online or download it at https://books.byui.edu/pubh_381_readings/step_4_gather_credible_evidence.